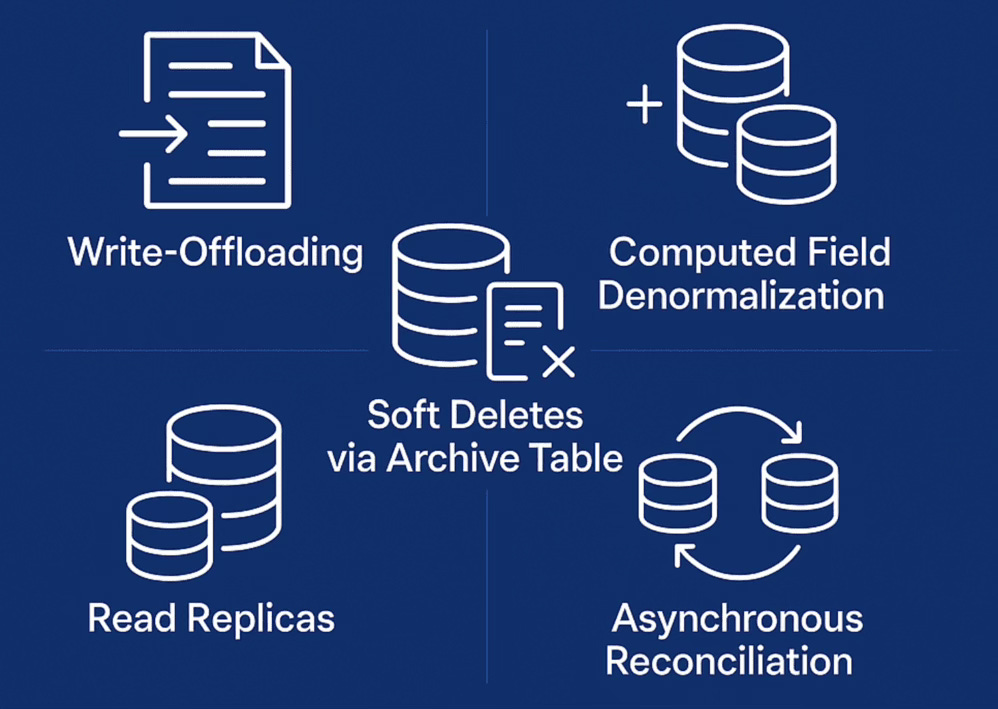

How to Reduce Load on Database in High-Traffic Backend Systems

Backend Strategies That Actually Scale

If your backend system is starting to slow down or your database feels like a bottleneck, you’re not alone. Scaling traffic exposes inefficiencies fast. In this article, we’ll look at some techniques to reduce database load without relying only on caching. These are practical, real-world patterns that work.

1) Write-Offloading: Reduce DB Load by Not Writing Immediately

The Common (Inefficient) Pattern:

Let’s say you’re building a backend for an e-commerce application.

Every time:

A user visits a product page,

Adds an item to the cart,

Clicks a button,

Or logs in…

You’re tracking these actions as analytics events like:

{ "user_id": 123, "event": "add_to_cart", "timestamp": "..." }What most teams do?

They INSERT this event directly into the database, every single time the action occurs.

So now, you have:

Thousands of tiny inserts per minute

No batching

Constant disk I/O

Table bloat

Increased DB write amplification

Over time, this chokes your primary DB, especially if you mix this low-priority logging with core data (like orders or users).

The Better Approach: Write-Offloading

Write-offloading means temporarily offloading write operations (that don’t need to be immediately persisted) to a buffer (like a queue or in-memory store), and flushing them later in batches.

1. Push events into a queue (e.g., Kafka, RabbitMQ, Redis Streams)

When the user triggers an action:

POST /track_eventInstead of writing directly to the DB, you:

Push the event to a Kafka topic (or any queue).

Return a 200 OK instantly.

This removes DB writes from your main app flow.

2. Consume and batch write from the queue

Set up a background consumer:

Reads 1000 events every 5 seconds.

Inserts them in bulk into the DB (single insert with

VALUES (...), (...), ...).

Why Is This Better?

Improves latency : Client doesn’t wait for DB writes. Instant response.

Reduces DB stress : 1000 batched inserts use less IOPS than 1000 separate inserts.

Retry / resilience : If the DB is down, your queue still collects events. Nothing is lost.

Scales better : Your DB won’t choke under high-traffic logging or low-priority writes.

Separation of concerns : Critical app logic and low-priority writes are decoupled. Easier to debug, retry, and scale individually.

Some real world use cases are Web click tracking, Email/sms logs, Inventory sync from external APIs, Game user actions etc

When NOT to Use Write-Offloading

For critical writes that must be persisted immediately (e.g., payments, user registration).

When you can’t tolerate even a few seconds delay in availability of data.

2) Read Replicas with Role-Based Routing: Don’t Let Reads Starve Your Writes

The Problem Most Backends Hit Eventually

You’ve built an awesome product. Users are growing. Everything is great… until your database starts choking.

Here’s what’s happening:

Every read (get user, get product, show dashboard) → hits the DB

Every write (signup, place order, update profile) → also hits the same DB

All this on a single primary database. Soon:

Reads slow down

Writes get queued

Lock contention increases

Latency spikes everywhere

You start throwing money at RDS/CloudSQL to scale vertically… but it only delays the inevitable.

Reads and writes have different requirements.

Writes need consistency

Reads need availability and speed

But using the same DB for both means your writes suffer when reads go crazy — and vice versa.

The Smarter Setup: Read Replicas with Role-Based Routing

What Are Read Replicas?

They’re read-only copies of your primary DB that:

Sync changes from the master (usually with a few seconds lag)

Handle SELECT queries, but not INSERT/UPDATE/DELETE

What Is Role-Based Routing?

It means you:

Route writes (e.g., creating users, updating orders) → primary DB

Route reads (e.g., viewing profiles, browsing items) → replica DBs

You explicitly control which queries go where, based on their role: read or write.

How It Looks in Practice

Let’s say you have:

primary-db.yourcompany.comreplica-db-1.yourcompany.comreplica-db-2.yourcompany.com

In your code:

if (isReadQuery(request)) {

db = getReadReplica(); // Round-robin between replicas

} else {

db = getPrimary();

}Why This Is Better

Separates concerns : Reads don’t interfere with write-heavy flows

Improves performance : Distributes read traffic across multiple DBs

Reduces load on primary : Makes writes more reliable and low-latency

Easier to scale reads : Just add more replicas

Works with caching : Combine with Redis for even better read performance

When You Must Read from Primary

Immediately after a write (e.g., show user what they just posted)

Transactions that require read-after-write consistency

Auth flows, payments, anything critical

3) Soft Deletes via Archive Table: Keep Your DB Lean Without Losing Data

The Typical “Safe” Approach: Soft Deletes

Most developers, rightly cautious, avoid hard-deleting records from production databases.

So what do they do?

They add a column like:

is_deleted BOOLEAN DEFAULT FALSEAnd every delete becomes:

UPDATE users SET is_deleted = TRUE WHERE id = 123;Then modify every read query to add:

WHERE is_deleted = FALSESounds safe, right?

But This Doesn’t Scale

Let’s say you run a SaaS product with:

20M users (5M deleted)

100M messages (30M deleted)

10M invoices (2M deleted)

Even with indexes:

Your

SELECTperformance drops (indexes grow larger)Table scans get expensive

Deleted rows clog analytics queries

ORM queries miss the

is_deletedfilter (bugs)

It’s safe but slow and messy.

The Smarter Alternative: Soft Deletes via Archive Table

Instead of flagging deleted rows in the main table, move them to a separate archive table.

So instead of this:

UPDATE users SET is_deleted = TRUE WHERE id = 123;You do this:

INSERT INTO users_archive SELECT * FROM users WHERE id = 123;

DELETE FROM users WHERE id = 123;You still keep the data — but outside the hot path of your main application.

So main table has active records only and archive table has deleted records (for occasional audit or debugging later).

Pro Tip: Use a Batch Job or Trigger

If real-time delete-move is risky, do it in batches:

Mark as

pending_deleteRun a cron every 10 mins:

INSERT INTO archive SELECT * FROM main WHERE pending_delete = true;

DELETE FROM main WHERE pending_delete = true;Or use a DB trigger to automate it on delete.

When NOT to Use Archive Tables

If the data is truly non-recoverable and deletion is permanent (e.g., security-related purges)

If regulatory compliance demands actual deletion (e.g., GDPR “right to be forgotten”)

In those cases: delete, log, and move on.

4) Asynchronous Reconciliation: When Immediate Consistency Isn’t Worth the Cost

Let’s say you’re building a social media app.

A user hits “Like” on a post. You want to:

Show the updated like count

Save that action for analytics

Update the user’s interaction history

Many teams make this mistake:

POST /like

↓

1. Insert into likes table

2. Update post's likes_count

3. Insert into analytics

4. Return 200 OKAll in one synchronous request.

Why That Hurts

This “do-it-all-now” strategy:

Slows down the user experience

Ties UI responsiveness to DB performance

Increases chances of partial failure (1 success + 1 failure = data inconsistency)

Spikes DB load during high traffic (likes, views, votes)

The cost of being immediately consistent is high — and often not worth it.

The Better Approach: Asynchronous Reconciliation

You accept the action right away, defer the heavy stuff, and reconcile the state later — safely and in the background.

It’s “eventual consistency” by design — but focused on efficiency, UX, and decoupling.

How It Works

User clicks “Like”

Server immediately:

Records the action in a log/queue

Returns 200 OK instantly

3. A background job (consumer/cron) picks it up later:

Inserts into

likestableUpdates the

likes_countLogs the event to analytics

Benefits

Faster user experience : No waiting for DB writes

Reduced DB load : Batch writes instead of real-time

Retry-friendly : If something fails, just retry the background job

Decoupled logic : Main app flow doesn’t break if analytics is down

Better control : You can throttle background jobs without impacting users

Challenges (and Fixes)

Duplicate processing : Use idempotent jobs with unique keys

Delay in visible data : For critical updates, keep a temporary in-memory count

Harder to test : Add metrics + audit logs to reconcile and monitor jobs

Out-of-order events : Use timestamps or versioning to resolve order

Some other techniques reduce load on the database

Computed Field Denormalization

Concept: Pre-compute and store expensive derived data instead of calculating on read.

Example:

You need to show “total spending per user” on every dashboard load.

- Maintain a total_spent column that updates via a trigger or at write-time.

Avoids joins and aggregation on every read.

Selective Caching (Smarter than blanket caching)

Concept: Cache only what’s truly expensive and accessed frequently.

Example:

A product page might cache:

Price (short TTL)

Description (long TTL)

Inventory count (semi-live, backed by Redis with fallback to DB)

Avoids over-caching low-impact fields and lets you tune for hot paths.

Query Trimming

Concept: Reduce columns and joins to bare minimum for a given use case.

Example:

Your mobile API doesn’t need the entire User record - just name and profile pic.

- Create a lightweight query (or materialized view) just for this.

Let me know in comments if you want detailed explanation of these concepts as well.

Reducing database load isn’t about one magic fix -it’s about using the right strategies for the right problems.

These techniques help your system stay fast and reliable under real-world traffic. Start with one, and scale smarter.